An update about Lighthouse progress over the past two months.

TL;DR

It's been almost two months since our last update; sorry for the radio silence. We haven't been slacking, we've just been writing code instead of blog posts! Over the past two months we have:

- Exceeded 1,000 stars on the sigp/lighthouse GitHub repository 🎉.

- Shipped five releases.

- Implemented the core consensus logic for the upcoming Altair upgrade and passed the tests.

- Begun work on updating to the new Altair networking specification.

- Produced a prototype of a fully proof-of-stake Ethereum.

- Improved our block production times by up to six times!

- Onboarded a new developer (@macladson) and signed up another to start in late April.

- Rebooted our graphical user interface (GUI) project; expect a full-featured GUI in Q2 2021!

Over the coming weeks we'll be continuing our work on Altair, working on the Rayonism merge hackathon and, of course, continuing our never-ending quest to optimize Lighthouse validator rewards and resource consumption.

Also, please keep your Lighthouse nodes updated. Each set of release notes states which users we recommend update to that release. If you're among the recommended users, please update for the sake of your own profits and/or the security of the network.

Altair

Work on the upcoming Altair hard fork has begun in earnest, with Michael, Sean and Pawan all chipping away at tasks in the main Altair PR (#2279).

Part of the difficulty in implementing Altair comes from committing to a representation for core types like the BeaconBlock and BeaconState now that their fields are subject to change. After hunting for off-the-shelf solutions and finding nothing suitable, we've created a new Rust library called SuperStruct which uses Rust's procedural macros to generate different variants of types, and useful functions on them. Armed with this library we have implemented the Altair consensus code in a modular way, and now have all of the spec tests passing. We've grown the library as we've iterated, and we think it has put us in a good position to deal with future forks in Lighthouse.
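To make the idea concrete, here is a hand-written sketch of the pattern: one struct per fork, an enum that wraps them, and getters that work across variants. It is only an illustration. The field names (including `sync_committee_bits`) are simplified and hypothetical, and this is neither SuperStruct's actual syntax nor the code it generates.

```rust
// Illustrative only: a manual version of the "one type, several fork variants"
// pattern. All fields are simplified, hypothetical stand-ins.

/// Block layout before Altair.
#[derive(Debug, Clone, PartialEq)]
pub struct BeaconBlockBase {
    pub slot: u64,
    pub proposer_index: u64,
}

/// Block layout from Altair onwards: the same fields plus one new field.
#[derive(Debug, Clone, PartialEq)]
pub struct BeaconBlockAltair {
    pub slot: u64,
    pub proposer_index: u64,
    /// Hypothetical stand-in for an Altair-only field.
    pub sync_committee_bits: u64,
}

/// Top-level type that the rest of the code base passes around.
#[derive(Debug, Clone, PartialEq)]
pub enum BeaconBlock {
    Base(BeaconBlockBase),
    Altair(BeaconBlockAltair),
}

impl BeaconBlock {
    /// Getter for a field shared by every variant.
    pub fn slot(&self) -> u64 {
        match self {
            BeaconBlock::Base(block) => block.slot,
            BeaconBlock::Altair(block) => block.slot,
        }
    }

    /// Getter for a field that exists only in some variants.
    pub fn sync_committee_bits(&self) -> Option<u64> {
        match self {
            BeaconBlock::Base(_) => None,
            BeaconBlock::Altair(block) => Some(block.sync_committee_bits),
        }
    }
}
```

Writing this by hand for every type and every fork gets repetitive quickly, which is the kind of boilerplate a procedural macro can generate instead.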

The remaining tasks before we can merge Altair support into stable are related to components outside of core consensus. The op pool needs to be updated to optimise for Altair's modified attestation incentives, and the beacon chain needs to start collecting and aggregating proofs related to the new light client feature. We hope to complete these tasks in the next few weeks so that we can run Altair testnets and start building better fork-aware Merge prototypes.

Merge Testnets

In late March we produced a rough-and-ready prototype of a "merge" testnet with Lighthouse and Geth (running in "Catalyst" mode). We published the details in a tweet storm.

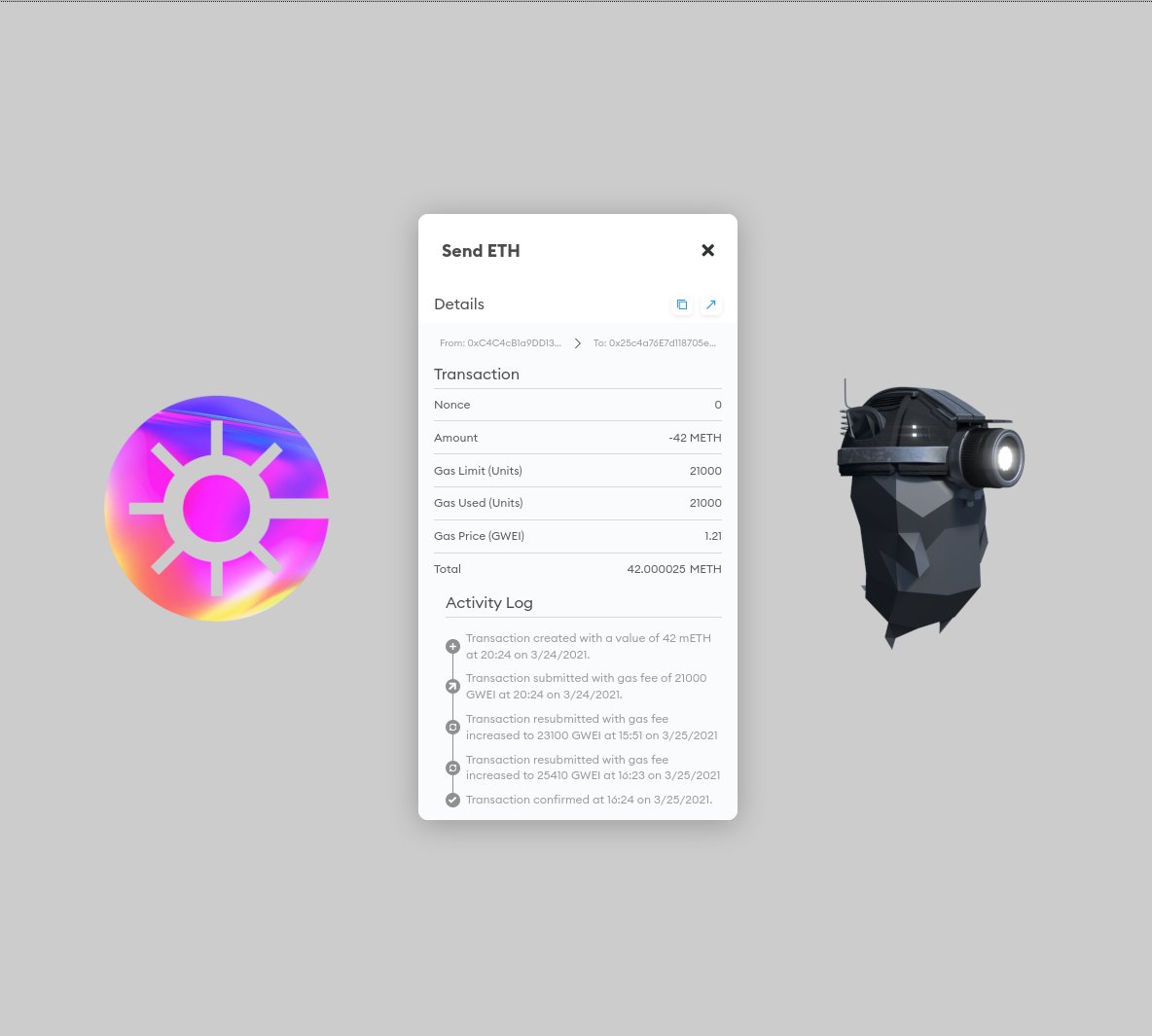

With this prototype we produced a single 42 mETH transaction to the Lighthouse donate address. mETH is worthless testnet ETH, akin to gETH. Amusingly, I didn't notice the naming collision with a prevalent and devastating illicit stimulant. Oops.

As mentioned in the tweet, this isn't supposed to demonstrate that we're immediately ready to deprecate proof-of-work. Instead, it should serve as a signal that Lighthouse has started development towards this end.

We'll continue this work into the exciting new Rayonism project over the coming weeks and months. Expect lots more progress with user-facing merge testnets. I'm optimistic that we'll see a multi-client merge testnet in May.

I also recommend reading Finalized #23 by Danny Ryan where he talks about renaming some terms:

- Instead of "Eth2", use "consensus layer"

- Instead of "Eth1", use "execution layer" (Danny uses the term "application layer" in his blog, but he now uses "execution layer" after some confusion arose)

On the Lighthouse side we'll start to move away from Eth1/Eth2 towards execution/consensus. However, it's going to take us a while since those terms are heavily baked into our code, APIs and documentation. Naming is hard.

Memory Usage Optimization

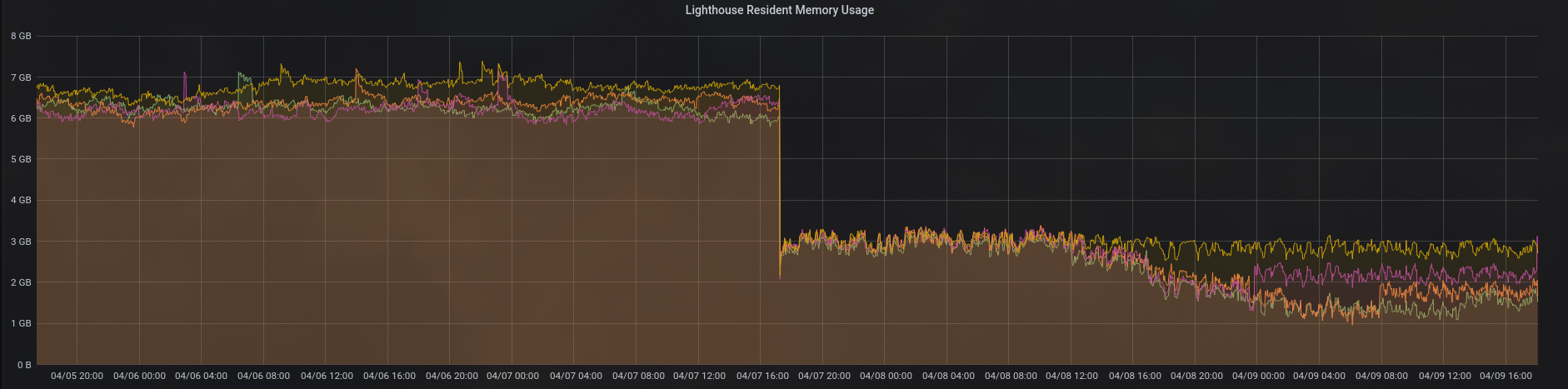

We've made some great progress with reducing the memory footprint of Lighthouse on Linux. As the graph shows, we've managed to reduce the memory footprint of our massive Pyrmont nodes from 6 GB to 3 GB (or less!).

We've mentioned these changes before in Update 34 (contains background info for the rest of this paragraph). However, since then we've made more progress with the M_MMAP_THRESHOLD flag. By fixing the mmap threshold, we use anonymous memory-maps for large allocations instead of fragmenting our arenas.

We hope to get this included in a release in the coming two weeks. Read more and follow our progress on Tune GNU Malloc (#2299).
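For readers curious what pinning the threshold can look like, below is a minimal sketch that calls glibc's `mallopt` directly from Rust. It is not the code from the PR. The helper name `set_mmap_threshold` is hypothetical, and the sketch assumes a Linux target linked against glibc, where `M_MMAP_THRESHOLD` is defined as `-3` in `<malloc.h>`. On other targets the helper does nothing and returns `false`.

```rust
use std::os::raw::c_int;

/// glibc's parameter id for the mmap threshold (see <malloc.h>).
pub const M_MMAP_THRESHOLD: c_int = -3;

#[cfg(all(target_os = "linux", target_env = "gnu"))]
extern "C" {
    // Provided by glibc; returns 1 on success and 0 on error.
    fn mallopt(param: c_int, value: c_int) -> c_int;
}

/// Hypothetical helper: pin the mmap threshold to `bytes`.
/// Allocations of at least this size are then served by anonymous mmap
/// rather than from the malloc arenas.
#[cfg(all(target_os = "linux", target_env = "gnu"))]
pub fn set_mmap_threshold(bytes: c_int) -> bool {
    // SAFETY: mallopt only changes allocator tunables and takes plain integers.
    unsafe { mallopt(M_MMAP_THRESHOLD, bytes) == 1 }
}

/// Fallback for targets without glibc: nothing to tune.
#[cfg(not(all(target_os = "linux", target_env = "gnu")))]
pub fn set_mmap_threshold(_bytes: c_int) -> bool {
    false
}
```

A call such as `set_mmap_threshold(128 * 1024)` would normally be made once, early in `main`, before the program starts making large allocations. The 128 KiB value here is only an example (it matches glibc's documented default starting threshold) and is not necessarily the value Lighthouse uses. According to the `mallopt` documentation, setting the parameter explicitly also turns off glibc's dynamic adjustment of the threshold.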

You can see our deprecated (yet interesting) experiments with jemalloc in Use Jemalloc by Default (#2288).